Musings on The AI Dilemma 💗👾

How to bring ideas to life in a good way?

Happy buzzy spring! Alas, I miss the stillness of winter already… 🤭

Whew, there’s a lot going on right now. Again. For the last month and a half I’ve taken many of those involuntary deep breaths; you know, the type of sighs that naturally release upon hearing, say, challenging news. It’s a similar feeling tone to March 2020 when it seemed like the world was about to change in a myriad of ways. The uncertainty in awareness of invisible exponential changes accelerating all around. What did you learn about yourself in the last cycle that you might want to bring into this new one?

I find myself in an interesting position, working with a small team at The Center for Humane Technology that leaders in AI, business, government, media, education and beyond are looking to for guidance. We’ve quickly done our best to play our part: make sense of what’s going on, craft messaging that empowers people to make wise and courageous choices from wherever they are positioned in the web of life, and convene power nodes to coordinate an effective response to what we see as a “race to recklessness” amongst major players in the space.

We aim to close the gap between what the world hears publicly about AI from splashy CEO presentations, and what the people who are closest to the risks and harms inside AI labs are telling us. We’ve been working to translate their concerns into a cohesive story and share with major institutions. Our co-founders Tristan Harris and Aza Raskin have been busy discussing A.I. risk in Forbes, NPR, Fox, Washington Post, NBC Nightly News, The Information, Wired, and New York Times in an op-ed with Yuval Noah Harari.

We recently released a podcast called “The AI Dilemma” that summarizes our core messages. It’s an abridged version of a live briefing we gave in SF in early March (DM for the video). Note: things are moving so fast in this space that some info is already outdated, or our stance has changed slightly, but the core essence persists:

🎙️Listen to The AI Dilemma

Take a breath. It’s a lot. And with any big issue, there’s a lot left unsaid. About the beautiful possibilities of this new class of technology, issues with bias and ownership and governance, capitalism... we centered the unique frames that we felt were ours to contribute for the audience we have access to, given what we’ve learned from years of experience learning from and addressing The Social Dilemma.

tldr:

When it comes to deploying humanity’s most consequential technology, the race to dominate the market should not set the speed. We should move at whatever speed enables us to get this right. A.I. systems with the power of GPT-4 and beyond should not be entangled with the lives of billions at a pace faster than cultures can safely absorb them. To that end, we’ve co-signed Pause Giant AI Experiments: An Open Letter with over 1,000 leading experts in the field.

A.I. indeed has the potential to help us defeat cancer, discover lifesaving drugs and invent solutions for our climate and energy crises. There are innumerable other benefits we cannot begin to imagine. But it doesn’t matter how high the skyscraper of benefits A.I. assembles if the foundation collapses. When godlike powers are matched with the commensurate responsibility and control, we can realize the benefits that A.I. promises.

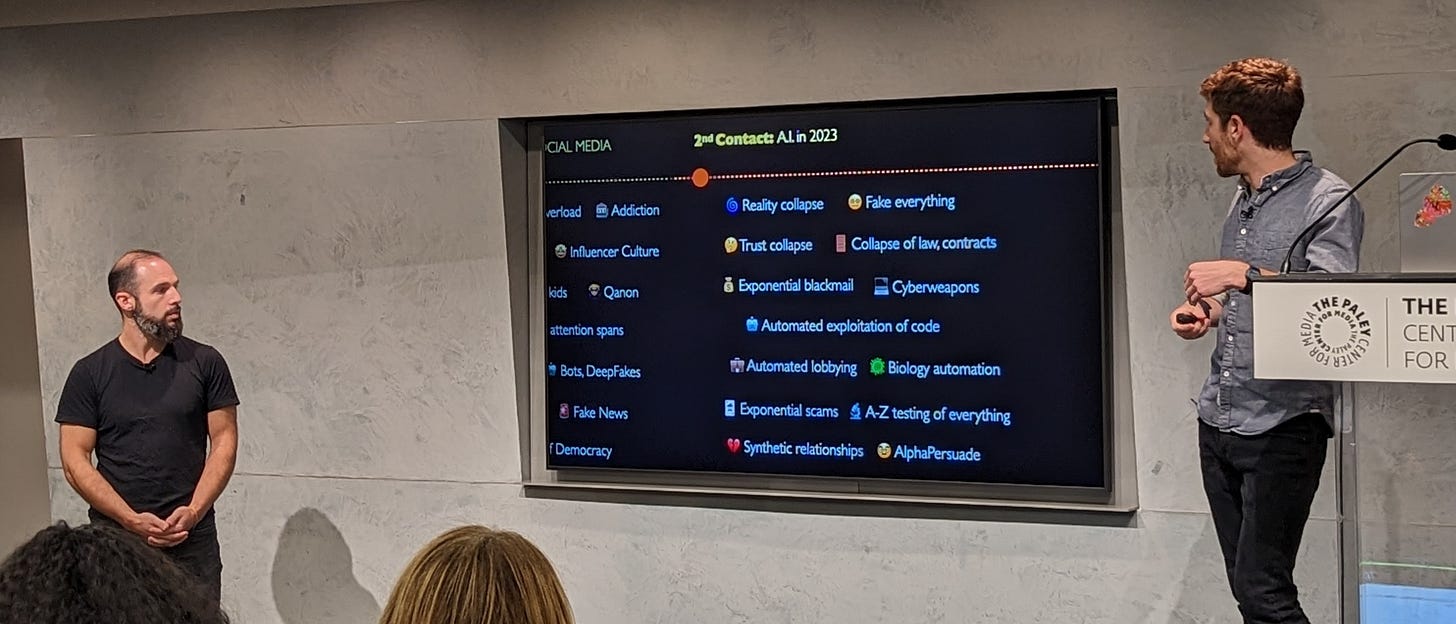

Social media was the first contact between A.I. and humanity, and humanity lost. First contact has given us the bitter taste of things to come. We cannot afford to lose again. But on what basis should we believe humanity is capable of aligning these new forms of A.I. to our benefit? If we continue with business as usual, the new A.I. capacities will again be used to gain profit and power, even if it inadvertently destroys the foundations of our society.

Please consider sharing the podcast with others who you think might benefit, especially those working on AI in any capacity. Feel free to respond with any reactions. What are you seeing that we might be missing or off the mark on? I’m feeling really proud and grateful to be playing for this team, contributing my little bit to the conversation.

💭 What I’m Wondering About

There are endless angles we can look at AI from. I’m still developing my own thoughts, trying to hold a spacious beginner’s mind and accept that my views will change, while also standing firm in what I know to be true in what can feel like a real-time battlefield. Integrating the ideas of experts with hard-earned personal lessons around sensitivity, slowness, cycles, our inner worlds. Inquiring about my unique role in all of it. What’s yours?

Social Networking - Having just moved to Berkeley, I find myself traversing social scenes of folks working on all different sides of AI, from research and safety to entrepreneurship and investment. IRL and Signal chats, dance floor and conference hall. Networking! I like networking. It’s fun, easy, and sometimes surreal. e.g. last Burning Man I was connected with my “soul mate” through the Costco Soulmate Trading Outlet, who I finally met IRL the other day and learned he lives around the corner, works on responsible AI, and holds similar visions to me! I love people thinking and tinkering at the intersection of tech and business, philosophy and systems, psychology and spirituality. They are some of my closest people. Feel free to intro me to more of them.

Wisdom Gap - AI is such a mirror for ourselves and society. I’ve been thinking a lot about Sam Altman (CEO of OpenAI) and related tech leaders, trying to diagnose the gap between their leadership and sensemaking, and what we at CHT argue would be adequate in the podcast above. What are some of their core wounds, blindspots, things they need to learn and unlearn? What would it take to see that their incrementally better business moves (which, to their credit, go further than nearly any tech company of that scale - 100x cap on investor returns, governed by nonprofit, clause in charter on prioritizing safety collab > racing, building in the open, Sam & co do lots of “transformation” work…) are still inadequate for the innovation they are stewarding, and feel motivated to close that wisdom gap? To be more present and sensitive to the risks and impact; to bind power with commensurate responsibility. It’s an exquisite pressure test for the humane innovation playbook.

Training Humaneness - Which is why I’m particularly jazzed about how my networking around the AI Dilemma integrates with a new stream of my professional work: building out personal development training for technologists. Picking up where I left off two years ago with The Path of the Humane Technologist essay. More to come on this, but please feel free to write me if you have ideas to share on inner education and practices for outer change. It has been said that we can’t have the power of Gods without the wisdom, love, and prudence of Gods. My argument is we don’t get humane technology without humane technologists (+ ideas, models, organizations, systems), and that the path(s) of becoming a more humane technologist is a rich and meaningful lifepath to embark on, now.

Village Elders - It makes me feel like my / our role in the village is more of the wise elder, watching the kids playing with fire, and helping to guide them towards more safe, responsible, mature actions in service of the collective wellbeing of the tribe (+ ideally dissolve the ego through initiatory experiences - the child who is not embraced by the village will burn it down to feel its warmth). We see things in a way others don’t because of where we’ve focused our attention. We’re calling our family members up to higher standards to meet the complexity and scale of our existential challenges. Calling out the race dynamics, while calling in the leaders. I look forward to creating conversation and experiences with those working on AI and committed to closing their wisdom gap in order to bring these innovations into the world in a good way.

Psychospiritual - There are psychological, spiritual, and mythic dimensions to the AI Dilemma that don’t make the mainstream narratives. I’m poking around for clues. It has been said that myths are not about something that happened in the past, but about something that keeps happening. To that end, I personally feel lots of hope, that there is a natural response happening through life on Earth in order to meet the AI Dilemma.

Business as Usual - My biggest near term fear is that AI is going to amplify and accelerate “business as usual.” The worst parts of our economy and society are likely going to get more extractive, addicting, harmful (this is an excellent time to harden your online security practices). This will be felt most acutely by those who are already most vulnerable, and likely by many who have yet to personally feel the weight of oppressive systems. Which ought to illuminate these flawed systems in a bigger way, and hopefully inspire sorely needed reform and transformation (e.g. UBI). It’s going to be quite an election year….

Whew. Happy Nisan, a month of liberation and miracles. I’m gearing up for Passover in the Desert next week. In these dynamic times, I’m thinking about how I can be more humane in my daily life. Give to myself and others, say the challenging thing, stop and smell the flowers. Those good and beautiful things that machines aren’t capable of but make this mysterious life worth living.

Lots of love,

Andrew